Smart Sign Language Glove Translator

An ESP32-powered wearable device that translates sign language gestures into spoken or text-based output. It utilizes a K-Nearest Neighbors (KNN) algorithm for real-time gesture classification and features a web-based 3D visualization tool built with Three.js.

Bridging Communication Gaps with Wearable Tech

The Smart Sign Language Glove is an innovative embedded system designed to facilitate communication for individuals who use sign language. By combining wearable sensors with machine learning, the project translates physical hand gestures into digital text or spoken words in real-time. This project demonstrates a sophisticated integration of embedded hardware, asynchronous web communication, and edge computing.

Technical Architecture

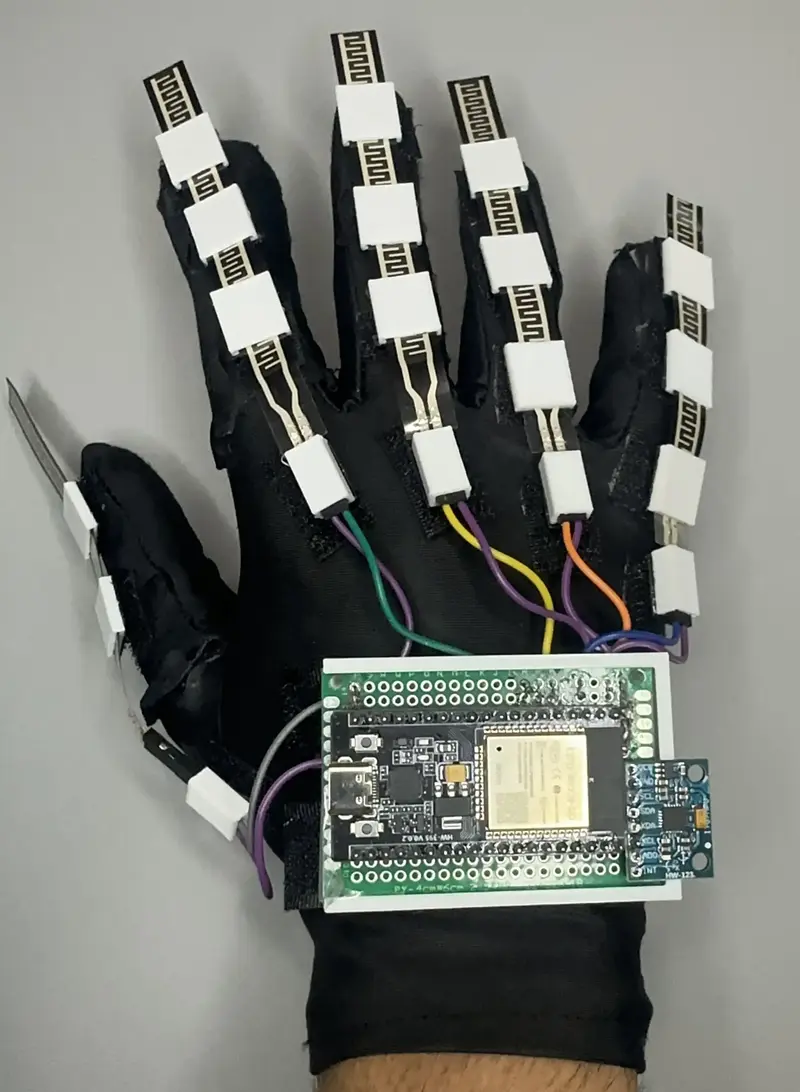

At the heart of the system is an ESP32 microcontroller, which serves as the central processing unit. The glove is equipped with five flex sensors (variable resistors) to monitor finger movement and an MPU6050 gyroscope/accelerometer module to track hand orientation and motion.

The firmware is built using the Arduino framework within the PlatformIO ecosystem. It leverages the LittleFS filesystem to manage web assets and configuration data, while the ESPAsyncWebServer library handles high-performance, non-blocking communication between the glove and a connected web client.

Machine Learning on the Edge

Gesture recognition is handled locally on the ESP32 using a K-Nearest Neighbors (KNN) algorithm. The system captures sensor data in three-second windows to record the full range of motion for specific phrases. Before classification, the data undergoes a normalization process to ensure consistency regardless of the user’s hand size or movement speed.

The KNN model calculates the Euclidean distance between the current gesture’s feature vector and stored training data. To maintain high reliability, the system only accepts predictions with a confidence score of 80% or higher. This approach allows for a responsive and accurate translation experience without requiring a constant connection to a heavy cloud-based AI backend.

Real-Time 3D Visualization and Web Interface

One of the project’s standout features is its interactive web interface. The ESP32 acts as a web server, serving a JavaScript-based client that utilizes the Three.js library. This client renders a dynamic 3D hand model that mirrors the user’s physical movements in real-time.

Key web technologies used include:

- Server-Sent Events (SSE): Used for one-way, real-time data streaming from the ESP32 to the browser, allowing the 3D model’s bones to rotate and flex instantly as the user moves.

- Web Speech API: Converts the predicted gesture labels into audible speech, providing immediate feedback for the translation.

- GLTFLoader: Loads a detailed 3D hand armature, which is then animated using GSAP for smooth transitions between poses.

Hardware Implementation

The hardware is designed for portability and ease of use. The components are soldered onto a compact 4cm x 6cm PCB, which is mounted to the glove using 3D-printed parts and velcro. The circuit includes a voltage divider network for the flex sensors and an I2C interface for the MPU6050. While currently powered via a wired connection during the development phase, future iterations aim for complete independence with external power supplies and wireless firmware updates.

Future Development

The project roadmap includes transitioning from KNN to more complex neural networks to further increase prediction accuracy. Additionally, the developers plan to implement a dual-hand system to support more complex sign language vocabularies, making the tool even more versatile for real-world communication.